The text-to-SQL task involves converting natural language questions into executable SQL queries on a relational database. While modern large language models (LLMs) excel at many generative tasks, generating correct and complex SQL queries remains challenging. In the paper RetrySQL: text-to-SQL training with retry data for self-correcting query generation, the authors introduce a training paradigm that teaches the model to self-monitor and correct its reasoning steps during generation, rather than relying solely on post-processing modules.

RetrySQL Concept

Reasoning Steps Generation

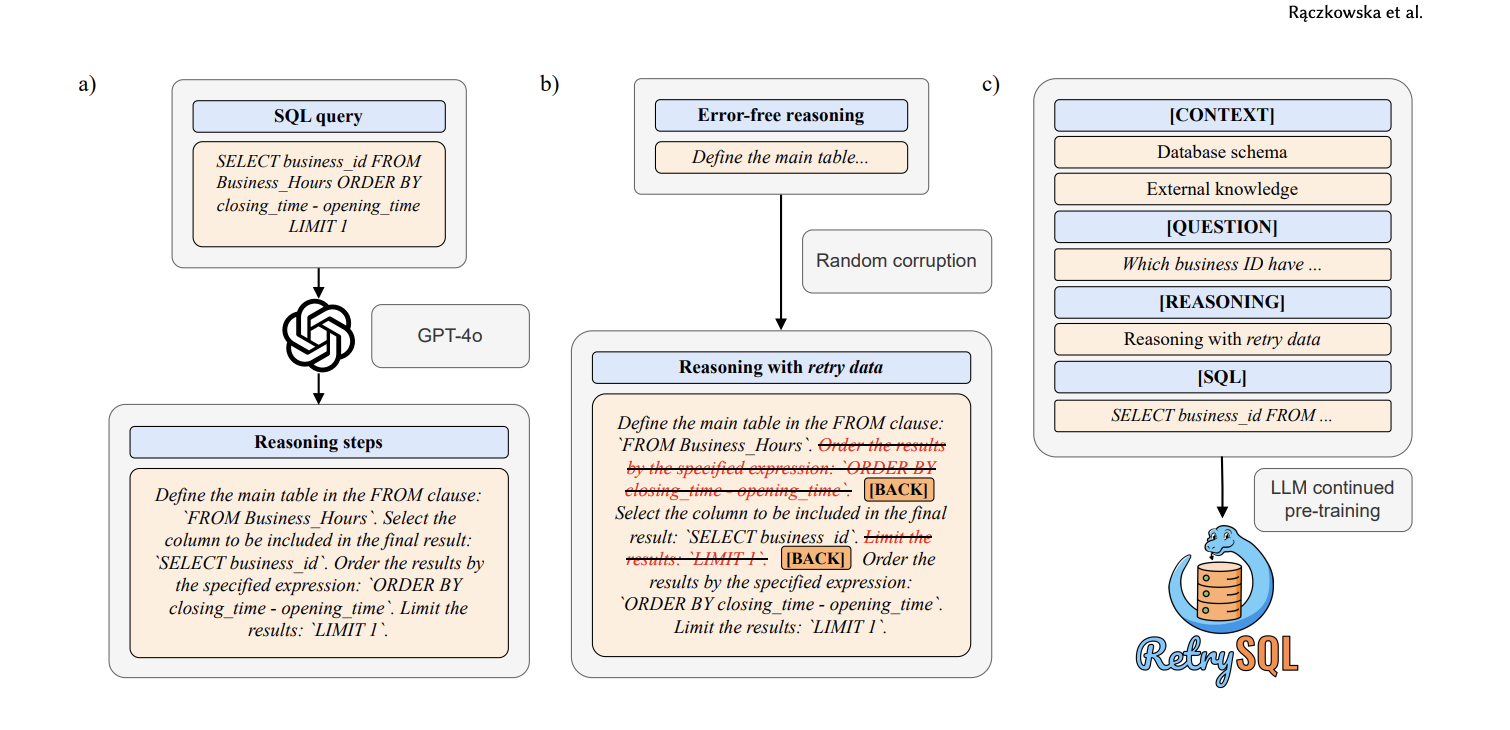

For each example in the BIRD dataset, a sequence of reasoning steps leading to the SQL query structure (e.g.,$FROM$→$WHERE$→$GROUP\ BY$) is synthetically generated using GPT-4o.Retry Data Creation

From each reasoning sequence, certain positions are randomly chosen to insert incorrect steps ($ r_{error} $), followed by the token$[BACK]$and then the correct step.

Example (Forward Single, $ p_{retry}=0.2 $):… → $r_1$ (FROM orders) → $r_{error}$: WHERE status = 'Pending' → $[BACK]$ → $r_2$ (WHERE status = 'Shipped') → …Continued Pre-Training

The OpenCoder 1.5B model, pre-trained on code including SQL, undergoes continued pre-training on the augmented BIRD dataset with retry data. The model input format is:[CONTEXT] — DDL schema + external knowledge [QUESTION] — natural language query [REASONING] — reasoning steps with retry [SQL] — correct SQL query

RetrySQL Example

Context: orders table

| order_id | status | payment_date |

|---|---|---|

| 1 | Shipped | 2024-06-05 |

| 2 | Pending | 2024-06-12 |

| 3 | Shipped | 2024-07-01 |

| 4 | Shipped | 2024-06-20 |

Natural language task:

“How many orders with status ‘Shipped’ were paid in June 2024?”

Chain-of-thought

r₁: FROM orders r₂: WHERE status = 'Shipped' r₃: WHERE payment_date BETWEEN '2024-06-00' AND '2024-06-31' ← date errorRetry

r₃_error: WHERE payment_date BETWEEN '2024-06-00' AND '2024-06-31' [BACK] r₃: WHERE payment_date BETWEEN '2024-06-01' AND '2024-06-30'Final SQL

SELECT COUNT(*) FROM orders WHERE status = 'Shipped' AND payment_date BETWEEN '2024-06-01' AND '2024-06-30';

Results and Conclusions

- Execution Accuracy Improvement: Continued pre-training with retry data (FS variant, $p_{retry}=0.2$) increased $EX_{overall}$ by approximately 4 percentage points, with the largest gains on challenging queries.

- Error-Detection Ability: Linear probing on the base OpenCoder showed a balanced accuracy of about 82% in distinguishing correct from incorrect steps, confirming the model’s latent self-correction ability.

- Competitive Efficiency: RetrySQL-trained OpenCoder 1.5B achieves execution accuracy close to GPT-4o in an end-to-end pipeline, despite having far fewer parameters.

Applications

- BI Systems and Analytics: Users can pose complex questions in natural language, and the model self-corrects its reasoning steps, reducing errors.

- Resource-Constrained Environments: Smaller open models can compete with large LLMs, lowering deployment costs.

- Research and Development: The retry data paradigm can be extended to other generative tasks, such as code generation in other languages or process planning.

📎 Links

- Based on the publication 📄 2507.02529